Imagine I have three dice: a red one, a blue one and a green one. I will perform five experiments.

First, note the following. Imagine an experiment has $n_1$ elementary outcomes. Another experiment has $n_2$ elementary outcomes, and so on, up to $n_N$ elementary outcomes for the $N$th experiment. Imagine further that all $N$ experiments are independent of each other. The easiest way to picture this is to have all $N$ experiments happen at exactly the same time (for example, all $N$ dice rolled at once). Now switch your attention to another experiment, which is nothing other than the collection of all $N$ independent experiments just mentioned. This new super-experiment has $n_1 \times n_2 \times \dots \times n_N$ elementary outcomes.

In the general case, no two experiments need to be the same in any way. For example, we could combine the tossing of a die with the flipping of a coin. Here $n_1 = 6$ and $n_2 = 2$, so the combined experiment has $n_1 \times n_2 = 6 \times 2 = 12$ elementary outcomes.

Nor do each experiment's elementary outcomes need to be equiprobable. Imagine a loaded die with probabilities for pips 1 to 6 respectively of $\left\{ \frac{1}{6}, \frac{1}{6}-\frac{1}{100}, \frac{1}{6}-\frac{2}{100}, \frac{1}{6}-\frac{3}{100}, \frac{1}{6}-\frac{4}{100}, \frac{1}{6}+\frac{1+2+3+4}{100}\right\}$. If you combine it with a loaded coin, whose probabilities for H and T respectively are $\left\{ \frac{49}{100}, \frac{51}{100}\right\}$, you'd still be entitled to claim that the joint experiment had 12 elementary outcomes. Of course, when the experiments you're repeating or parallelising all have the same number of elementary outcomes $n$, the combined experiment has $n^N$ elementary outcomes.
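The counting rule above can be checked by brute force. Here is a minimal Python sketch that simply lists every elementary outcome of a combined experiment; the variable names are my own, chosen for illustration.

```python
from itertools import product

die = [1, 2, 3, 4, 5, 6]
coin = ["H", "T"]

# Every elementary outcome of the combined experiment is one (die, coin) pair.
die_and_coin = list(product(die, coin))
print(len(die_and_coin))  # 12

# Repeating the same n-outcome experiment N times gives n**N outcomes.
three_dice = list(product(die, repeat=3))
print(len(three_dice))  # 216
```

Note that the count does not depend on whether the die or the coin is loaded: loading changes the probabilities attached to the outcomes, not how many there are.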

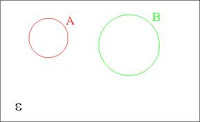

The first three experiments are ones where the order/colour is important. That is, a red $1$ with a blue $2$ does not amount to the same thing as a blue $1$ with a red $2$.

Experiment 1

I toss the red die. This has information content $I = -\sum_{i=1}^{6} \frac{1}{6} \log_2 \frac{1}{6} = \log_2 6 \approx 2.6$ bits.

Experiment 2

I toss the red die and the blue one. Now $I = -\sum_{i=1}^{36} \frac{1}{36} \log_2 \frac{1}{36} = \log_2 36 \approx 5.2$ bits.

Experiment 3

I toss the red die and the blue one and finally the green one. This has information content $I = -\sum_{i=1}^{6^3} \frac{1}{6^3} \log_2 \frac{1}{6^3} = \log_2 6^3 \approx 7.8$ bits.

Each new die adds another $2.6$ bits of information. Next up are repeats of experiments 2 and 3 where I don't care any more about colour. I'll manually create the equivalence classes this time but in another post I'll do it via combinatorics.
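To check the three figures for the coloured dice, here is a small Python sketch. The `entropy` helper is my own name for the usual $-\sum p \log_2 p$ formula, not something defined elsewhere in this post.

```python
from math import log2

def entropy(probabilities):
    """Shannon entropy in bits: -sum(p * log2(p)) over the outcomes."""
    return -sum(p * log2(p) for p in probabilities if p > 0)

for n_dice in (1, 2, 3):
    n_outcomes = 6 ** n_dice
    # Ordered (coloured) fair dice: every elementary outcome is equally likely.
    bits = entropy([1 / n_outcomes] * n_outcomes)
    print(n_dice, round(bits, 1))
# 1 2.6
# 2 5.2
# 3 7.8
```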

Experiment 4

Be very careful here. There are two analyses of pair/no-pair equivalence classes, both of which are valid in general, but only one of which is valid for my current purposes. To highlight the danger, I'll show you the wrong analysis first.

- Of the 36 elementary outcomes, I can see two kinds: pairs and non-pairs. There are only 6 pairs. An example of one non-pair equivalence class: {Red 1 + Blue 2, Blue 1 + Red 2}. Each non-pair equivalence class has two members, so its probability must be $\frac{1}{36} + \frac{1}{36} = \frac{1}{18}$. If each non-pair equivalence class eats up two elementary outcomes, then there must be only 15 of them, $(36-6)/2$. So $I = -\left(\frac{6}{36} \log_2 \frac{6}{36} + \frac{15}{18} \log_2 \frac{15}{18}\right) = 0.65$ bits. Wrong! The mistake here is that I lumped all the pairs together into one outcome and all the non-pairs into another. Hence I turned my two-throw into an experiment with only two (highly unbalanced) meaningful outcomes. Even tossing a fair coin reveals more information than this.

- I calculate the information content of a typical member of each of these two kinds of equivalence class. Then I multiply each of these two representative information counts by the number of equivalence classes of that kind. Mathematically, the class counts (6 and 15) never appear inside the log. With $I_{\text{PAIR}} = -\frac{1}{36} \log_2 \frac{1}{36}$ and $I_{\text{NO PAIR}} = -\frac{1}{18} \log_2 \frac{1}{18}$, I get $I = 6 \times I_{\text{PAIR}} + 15 \times I_{\text{NO PAIR}} = 4.3$ bits.

This makes sense. Throwing away some of the information puts this scenario somewhere between tossing one die and tossing two coloured dice.
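Both analyses of experiment 4 can be reproduced by enumeration. The Python sketch below builds the equivalence classes by sorting each (red, blue) roll so that colour is forgotten; the names `classes`, `per_class` and `lumped` are my own.

```python
from collections import Counter
from itertools import product
from math import log2

def entropy(probabilities):
    """Shannon entropy in bits: -sum(p * log2(p)) over the outcomes."""
    return -sum(p * log2(p) for p in probabilities if p > 0)

# Forget colour: sort each (red, blue) roll so (1, 2) and (2, 1) coincide.
classes = Counter(tuple(sorted(roll)) for roll in product(range(1, 7), repeat=2))

# Right analysis: one probability per equivalence class (6 pairs, 15 non-pairs).
per_class = [count / 36 for count in classes.values()]

# Wrong analysis: only two outcomes, "some pair" versus "some non-pair".
lumped = [6 / 36, 30 / 36]

print(len(classes))                   # 21
print(round(entropy(per_class), 1))   # 4.3
print(round(entropy(lumped), 2))      # 0.65
```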

Experiment 5

I'm already expecting the result of experiment 5 to be somewhere between $5.2$ and $7.8$ bits, just by analogy with what I learned in experiment 4. I'm going to try to work out the equivalence classes without combinatorics, which as you'll see is a pain. Actually, the pain is not in the arithmetic, but in the mental accounting for the equivalence classes - a pain you still have when you switch the arithmetic to combinatorics. Anyway, we know there are $6^3=216$ elementary outcomes. There are three categories of equivalence class: triplets (T), all different (D), and a pair plus a single (P). Note the lesson learned from the first (wrong) analysis in experiment 4 above. I'm not saying there are 3 equivalence classes, just 3 categories of equivalence class.

First, I'd like to know how the $216$ elementary outcomes get divided up among the three categories of equivalence class. The triplets are easy. I expect 6 elementary outcomes in T. Also easy is D. I have a free choice (from 6) for the first die, then 5 choices for the second and finally 4 for the third, making a total of $6 \times 5 \times 4 = 120$ in the pot. I'm just going to infer the last pot size by subtraction: P must hold $216 - 6 - 120 = 90$.

Next I take a typical example of each category of equivalence class and work out its probability. I manually listed the permutations for a typical P class, $\left\{112,121,211\right\}$, and a typical D class, $\left\{123,132,213,231,312,321\right\}$. So a P class contains 3 elementary outcomes, a D class contains 6, and a T class contains just 1:

$P(T) = \frac{1}{6^3}$, $P(P)=3 \times \frac{1}{6^3}$, $P(D)=6 \times \frac{1}{6^3}$

Now, for D, if one equivalence class accounts for 6 of the 120 elementary outcomes in D, then there must be $\frac{120}{6}=20$ classes like that in total. Similarly there must be $\frac{90}{3}=30$ of the P classes, and there are 6 T classes. Writing $I_X = -P(X) \log_2 P(X)$ for the contribution of a single class in category $X$, I get $I = 6 \times I_T + 20 \times I_D + 30 \times I_P = 5.7$ bits.
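The class counts and the final figure for experiment 5 can be confirmed the same brute-force way. This is a sketch under my own naming; it sorts each three-dice roll to forget colour and then groups the resulting classes by how many elementary outcomes they contain.

```python
from collections import Counter
from itertools import product
from math import log2

# Forget colour: each sorted triple is one equivalence class, and its count
# is the number of elementary outcomes that fall into it.
classes = Counter(tuple(sorted(roll)) for roll in product(range(1, 7), repeat=3))

# Group classes by size: 1 = triplet (T), 3 = pair plus single (P), 6 = all different (D).
category_sizes = Counter(classes.values())
print(sorted(category_sizes.items()))  # [(1, 6), (3, 30), (6, 20)]

# Entropy over the equivalence classes, each with probability count / 216.
bits = -sum((count / 216) * log2(count / 216) for count in classes.values())
print(round(bits, 1))  # 5.7
```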

Throwing three dice unordered turns out to be not much different to throwing two dice ordered ($5.7$ versus $5.2$ bits). Experiment 5 has also demonstrated something else: this manual method of working out permutations does not scale. Combinatorics is just that - an industrial-strength mathematical power tool for coping with larger and larger numbers of combinations and permutations.
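As a taste of what the combinatorial route might look like, here is a hedged Python sketch. It is my own illustration rather than the method of the follow-up post: the function name `unordered_entropy` is hypothetical, and it assumes fair dice. Instead of listing all $6^N$ ordered rolls, it walks over the unordered outcomes directly and uses a multinomial coefficient to count how many ordered rolls each one stands for.

```python
from collections import Counter
from itertools import combinations_with_replacement
from math import factorial, log2

def unordered_entropy(n_dice, sides=6):
    """Entropy in bits of n_dice fair dice when colour/order is ignored."""
    total = sides ** n_dice
    bits = 0.0
    for multiset in combinations_with_replacement(range(1, sides + 1), n_dice):
        # Multinomial coefficient: how many ordered rolls sort to this multiset.
        arrangements = factorial(n_dice)
        for repeats in Counter(multiset).values():
            arrangements //= factorial(repeats)
        p = arrangements / total
        bits -= p * log2(p)
    return bits

print(round(unordered_entropy(2), 1))  # 4.3
print(round(unordered_entropy(3), 1))  # 5.7
```

The two printed values match experiments 4 and 5, and the same function can be called with a larger `n_dice` without any manual listing of permutations.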