Who does economic man care about? 'Himself' is probably most people's first thought. Rightly so. But can we come up with a better answer? Or perhaps it is an answer to the related question: who ought economic man to care about? Clearly this is a moral question.

What kinds of different answer could there be? To answer this I'll make reference to two dimensions of analysis from the subject of semiotics as laid out by its originator, Saussure: namely, elaboration of the meaning of a sign along the syntagmatic and the paradigmatic dimensions.

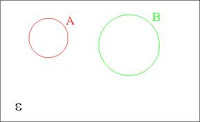

When considering how widely or narrowly economic man could cast his net, we can fix the widest and narrowest points. The narrowest point sees economic man making his calculations based on his current set of desires and feelings, with no consideration of the economic man he will become in the future. This I'll call the minimal or instantaneous economic man.

The widest point has each and every economic agent consider all humans who ever lived, who are living, or who ever will live. This is a quasi-spiritual position and would clearly be hard to implement, but implementation cost has never been the subject of concern for the founders of the model of economic man. Compare some major world religions, for example, in the scope of their caring - Christianity seems to cover the moment, Shinto covers the moment and the past, Buddhism covers the moment and the future (it's even extended beyond humanity itself). I'll call this model the congregation of economic brothers.

It seems strange to step into what has formerly been the realm of religion and morality, but I'm thinking of a cold hard piece of formal mathematics, an equation, which (somehow) has to try to capture this concern. This piece of mathematics, this set of equations, could itself inspire just as many fruitful directions of intellectual advancement as did the mathematical formalisation of the economic man of classical Ricardian economics (classical economic man).

Comte came closest to this in the recent humanist intellectual tradition in his 'living for others' dictum, including all currently living humans, but excluding antecedents and descendants.

If these are our intellectual boundaries in this search, how do we execute that search? Saussure extended the linguistic observation that words (signs) have a meaning or relevance by virtue of where they are placed in the sentence (the syntagmatic dimension) and by virtue of which word (sign) was chosen for this sentence, compared to the other words which could have been chosen in that place (the paradigmatic dimension).

All it takes is for instantaneous economic man to become interested in his future self and we arrive pretty quickly at classical economic man.

It is in theory possible to consider a bizarre model of economic man where he doesn't consider his own utility but someone else's - perhaps randomly chosen. I'll call this the economic surrogate.

If this surrogate relationship embodied a permanent fix to the other, then surely a world of economic surrogates would look like the world of classical economic man, except one where the surrogate/beneficiary could be considered to be classical economic man himself, with the additional detail that he outsourced the calculation to his 'slave'. Again, the implementation of this scheme might be well-nigh impossible due to the unlikelihood of the decider knowing much about the beneficiary's motivations or perhaps even preferences. If, on the other hand, the association were itself randomly reassigned from one beneficiary to another, then perhaps its macro-effect wouldn't be too different from a congregation of economic brothers.

There's a more natural-sounding direction in which our utility maximiser can widen his scope of caring - towards the family (or the household, to give it a more familiar ring). Clearly many parts of economics are happy to consider meaningful aggregations of agents - households, firms and governments being three obvious aggregations. I'll call economic man who cares about maximising the utility of his family the economic father.

Push this concern out beyond the set of descendants he's likely to meet to all of his descendants, and you're well on the way to the congregation of economic brothers again. There's no point in considering predecessors since they don't make decisions and don't need a utility maximising machine. However, households and families, while they often coincide, don't always need to in the general case - households can include many non-family members. Let's accept that the economic father can have genetic and co-habitee elements.

You'll have noticed that, aside from the computational complexity of these 'maximising N utility functions' approaches, you also have the practical difficulty of really knowing the preferences of all the others. This isn't fatal, I don't think, since there are reasonable philosophical arguments for questioning whether one can infallibly introspect even one's own preference set.

Just as soon as you start maximising N utility functions you begin to wonder if all N utility functions are equally important to you. In the more artificial permutations of economic man (surrogate, congregation) there is an implicit thought that the weightings are fair (in the mathematical sense), whether each time you get assigned to a surrogate or across the whole congregation.

But for the economic father it might be quite acceptable to weight closer relatives or co-habitees more heavily, devoting higher fractions of your own brawn to the goal of maximising their happiness. If in the limit you weight your own utility with 1.0 and each of the other N-1 with 0, you're back to classical economic man.
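One way to picture the weighting idea is as a weighted sum of the N utilities. The sketch below is only an illustration under assumptions of mine: the function name `weighted_welfare`, the convention that index 0 is the decision-maker himself, and the utility numbers are all hypothetical, and a simple weighted sum is just one of several ways the N utilities might be combined.

```python
from typing import Sequence


def weighted_welfare(utilities: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of N utilities (assumed form; index 0 is the decider)."""
    if len(utilities) != len(weights):
        raise ValueError("need exactly one weight per utility")
    return sum(w * u for w, u in zip(weights, utilities))


# Hypothetical utility levels for the decider and two others under one choice.
utilities = [4.0, 10.0, 1.0]

classical = [1.0, 0.0, 0.0]    # own utility weighted 1.0, the other N-1 weighted 0
fair = [1 / 3, 1 / 3, 1 / 3]   # equal ("fair") weighting across all N
father = [0.5, 0.3, 0.2]       # closer relations weighted more heavily

for name, weights in [("classical", classical), ("fair", fair), ("father", father)]:
    print(name, weighted_welfare(utilities, weights))
```

With the classical weights the objective collapses to the decider's own utility, which is the limiting case described above.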

An exponentially declining weighting could be imagined, whereby everyone in your utility-maximising team is ranked and given a weight that falls off with their position in the ranking. That way you can apply discount factors to future possible generations.
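As a rough sketch of such a scheme, the snippet below gives the person at rank k a weight proportional to `decay ** k`. The function name `exponential_weights`, the choice to put the decider at rank 0, and the normalisation so that the weights sum to 1 are my assumptions for illustration, not part of the argument.

```python
from typing import List


def exponential_weights(n: int, decay: float) -> List[float]:
    """Weights proportional to decay**rank, normalised to sum to 1.

    Rank 0 is assumed to be the decider; decay is assumed to lie in [0, 1].
    """
    if n < 1:
        raise ValueError("need at least one person in the ranking")
    if not 0.0 <= decay <= 1.0:
        raise ValueError("decay must lie between 0 and 1")
    raw = [decay ** k for k in range(n)]
    total = sum(raw)
    return [r / total for r in raw]


# Each step down the ranking counts for half as much as the one before.
print(exponential_weights(4, 0.5))

# The two extremes: decay 0 puts all weight on rank 0, decay 1 weights everyone equally.
print(exponential_weights(4, 0.0))
print(exponential_weights(4, 1.0))
```

Under these assumptions a decay of 0 gives the classical economic man weighting, and a decay of 1 gives the equal weighting of the congregation, so a single parameter spans the range between them.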